Have you ever noticed how Netflix changes the cover art for the same show? Or how your favourite shopping site quietly switches up its button styles? That’s often not a random design update; it’s A/B testing in action. Instead of relying on vibes or gut feelings, businesses run experiments to find out what actually works.

For data analysts, it’s a bread-and-butter skill that turns “I think” into “I know.” It’s proof that small experiments can lead to big wins, and that numbers beat vibes every time.

What is A/B Testing?

A/B testing is basically a head-to-head experiment for businesses. You take two versions of something (say a web page, an email, or even a Netflix thumbnail) and randomly split your audience: half your visitors see version A, while the other half see version B. After collecting enough data, you check which one performs better on a metric you picked in advance, such as clicks, sign-ups, or purchases.

Think of it like a blind taste test: one coffee made with almond milk, one with oat milk. Instead of guessing which your friends prefer, you let the results speak. That’s the power of A/B testing: it turns hunches into evidence and gives businesses data-driven answers instead of gut-driven guesses.

The beauty of A/B testing is that it changes a single variable and randomises everything else, so any difference bigger than random noise can be credited to that one change.
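In practice, splitting your audience randomly often means bucketing users by a hash of their ID, so a returning visitor keeps seeing the same version. Here’s a minimal Python sketch of that idea. The experiment name `thumbnail_test` and the 50/50 split are illustrative assumptions, not any particular platform’s API.

```python
import hashlib

def assign_variant(user_id: str, experiment: str = "thumbnail_test") -> str:
    """Bucket a user into 'A' or 'B' with a roughly 50/50 split.

    Hashing the (hypothetical) experiment name together with the user ID means
    the same user always lands in the same group for this experiment, while
    different experiments shuffle users differently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100  # a number from 0 to 99
    return "A" if bucket < 50 else "B"

# Simulate 10,000 visitors and check that the split comes out roughly even.
users = [f"user_{i}" for i in range(10_000)]
groups = [assign_variant(u) for u in users]
print("A:", groups.count("A"), "B:", groups.count("B"))
```

Because the assignment is deterministic, a visitor who comes back tomorrow sees the same version, which keeps their experience consistent and the groups clean.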

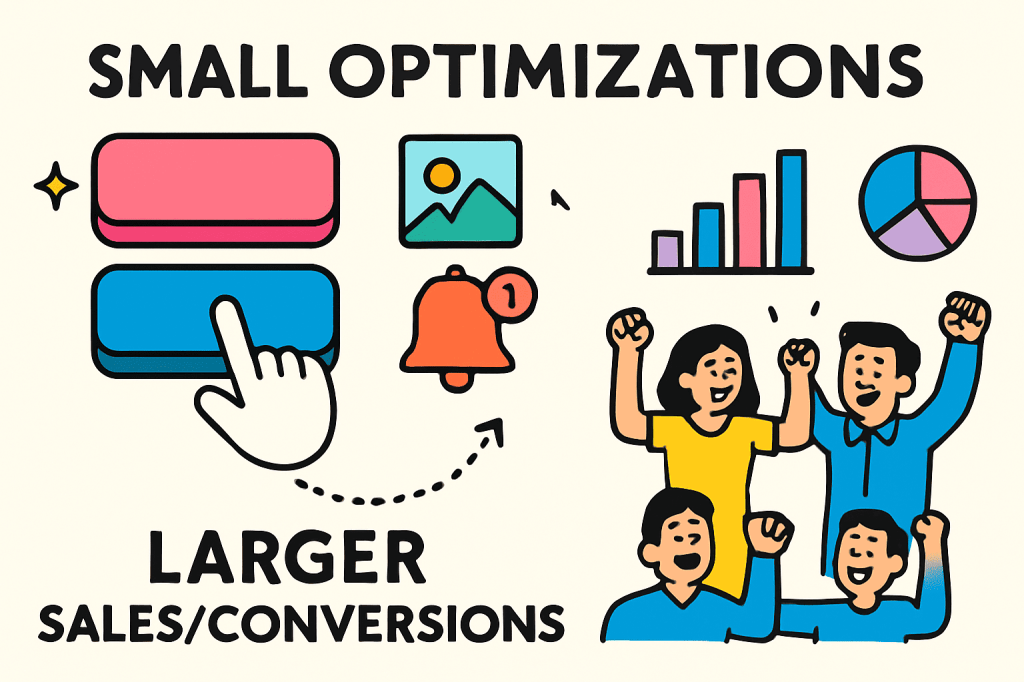

Small Tweaks, Big Wins

Here’s why businesses are obsessed with A/B testing: small tweaks, once proven, can add up to massive wins. Even a 2% increase in conversion rate can be worth millions of dollars to a company the size of Amazon.

- E-commerce: Does “Free Shipping” or “20% Off” drive more sales?

- Streaming apps: Which thumbnail gets more clicks?

- Food delivery: Does a push notification at 6 PM or 8 PM drive more orders?
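How much a “2% increase” is worth depends on whether it means a relative lift or two percentage points. Here’s a quick back-of-the-envelope sketch with made-up numbers: 1,000,000 monthly visitors, a 3% baseline conversion rate, and a $50 average order. None of these are real Amazon figures.

```python
def extra_monthly_revenue(visitors: int, old_rate: float, new_rate: float,
                          avg_order_value: float) -> float:
    """Extra revenue from moving the conversion rate from old_rate to new_rate."""
    extra_orders = visitors * (new_rate - old_rate)
    return extra_orders * avg_order_value

# Hypothetical store: 1,000,000 visitors a month, 3% baseline conversion, $50 average order.
VISITORS, BASELINE, AOV = 1_000_000, 0.03, 50.0

# "2% increase" read as a relative lift: 3% -> 3.06%
relative = extra_monthly_revenue(VISITORS, BASELINE, BASELINE * 1.02, AOV)
# "2% increase" read as +2 percentage points: 3% -> 5%
absolute = extra_monthly_revenue(VISITORS, BASELINE, BASELINE + 0.02, AOV)

print(f"Relative 2% lift:     ${relative:,.0f} extra per month")
print(f"+2 percentage points: ${absolute:,.0f} extra per month")
```

Same headline number, more than a 30x difference in value, which is why it pays to say exactly which kind of lift you mean.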

For a junior data analyst, this is where you get to flex. You’re not just cleaning spreadsheets; you’re running experiments that tell decision-makers, “This strategy works, and here’s the proof.” That’s powerful. It turns analytics from a rearview mirror into a roadmap for the future.

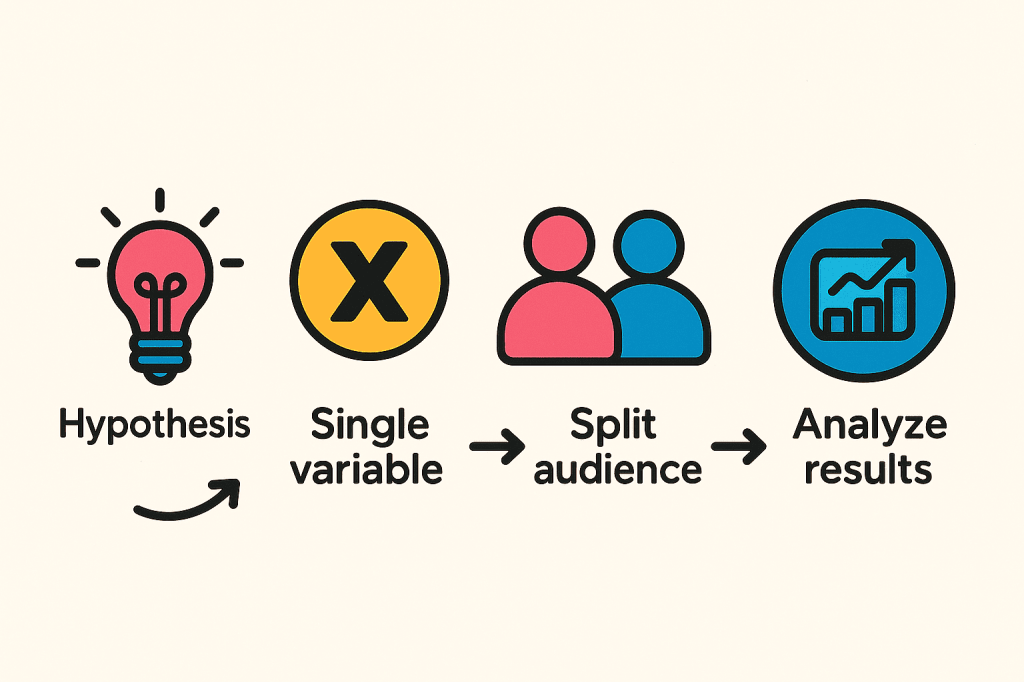

Less Guessing, More Testing

So how do you actually run a test that delivers these wins? It isn’t rocket science, but it does take discipline:

- Start with a hypothesis and a success metric: e.g., “A shorter sign-up form will increase completions.”

- Change a single variable: don’t test a button colour and a headline at the same time.

- Split your audience randomly into two groups.

- Run the test until you reach the sample size you planned up front, so the data can speak for itself.

- Analyse the results: is the difference statistically significant, and is it big enough to matter?
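For a yes/no metric like form completions, the analysis step is often a two-proportion z-test. Here’s a minimal sketch using only the Python standard library. The counts are invented for illustration, and in real work you might reach for a statistics library instead.

```python
from math import erfc, sqrt

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates, B minus A."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Under the null hypothesis both groups share one true rate, so pool them.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value

# Hypothetical results: long sign-up form (A) vs. shorter form (B), 5,000 visitors each.
z, p = two_proportion_z_test(conv_a=200, n_a=5_000, conv_b=250, n_b=5_000)
print(f"A: {200 / 5_000:.1%}  B: {250 / 5_000:.1%}  z = {z:.2f}  p = {p:.3f}")
```

A p-value below the threshold you chose before the test (0.05 is a common choice) suggests the difference is unlikely to be pure chance. Whether a one-point lift is worth shipping is still a business call.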

The trick? Resist the urge to celebrate too soon: early numbers are noisy, and real insights need time to shine.
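Knowing when a test has run long enough is much easier if you estimate the sample size before you start. Below is a sketch of a common normal-approximation formula for comparing two conversion rates. The 4% baseline, the 5% target, and the 1,000 visitors per group per day are hypothetical inputs.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH group to detect a change from p1 to p2.

    Normal-approximation formula for a two-sided, two-proportion test:
    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p1 - p2)^2
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p1 - p2) ** 2)

n = sample_size_per_group(p1=0.04, p2=0.05)
daily_visitors_per_group = 1_000  # hypothetical traffic
days = ceil(n / daily_visitors_per_group)
print(f"About {n:,} visitors per group, roughly {days} days at this traffic")
```

Smaller effects need far bigger samples, and many teams round the duration up to whole weeks so that both weekday and weekend behaviour are covered.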

Oops-Proof Your A/B Test

Even the best A/B tests can flop if you’re not careful. Here’s how to oops-proof yours:

- Don’t be impatient: calling a winner too soon, or stopping the moment the numbers look good, is like judging a movie by its trailer.

- Keep it simple: testing five things at once? You’ll never know which tweak did the trick.

- Mind the outside world: seasonal swings, holidays, ad campaigns, or random news events can sneakily skew your results.
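Why is calling a winner too soon so risky? A small simulation makes it concrete. The sketch below runs A/A tests, where both versions are identical, so any “winner” is a false positive. It compares an analyst who runs a z-test after every batch of visitors and stops at the first p < 0.05 with one who tests once at the planned end. All parameters (500 experiments, 10 looks, 200 visitors per look, a 5% conversion rate) are arbitrary assumptions.

```python
import random
from math import erfc, sqrt

def p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no conversions (or only conversions) so far: nothing to compare
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / se
    return erfc(abs(z) / sqrt(2))

def simulate(experiments=500, looks=10, users_per_look=200, rate=0.05, alpha=0.05, seed=42):
    """Return the false-positive rates of (peeking, single planned look) on A/A tests."""
    rng = random.Random(seed)
    peeking_hits = fixed_hits = 0
    for _ in range(experiments):
        conv_a = conv_b = n = 0
        declared_early = False
        for _ in range(looks):
            # Both groups share the SAME true conversion rate, so any "winner" is noise.
            conv_a += sum(rng.random() < rate for _ in range(users_per_look))
            conv_b += sum(rng.random() < rate for _ in range(users_per_look))
            n += users_per_look
            # The impatient analyst checks after every batch; one significant look is enough.
            if p_value(conv_a, n, conv_b, n) < alpha:
                declared_early = True
        peeking_hits += declared_early
        # The patient analyst tests once, at the planned sample size.
        fixed_hits += p_value(conv_a, n, conv_b, n) < alpha
    return peeking_hits / experiments, fixed_hits / experiments

peeking_rate, fixed_rate = simulate()
print(f"False winners when peeking:      {peeking_rate:.1%}")
print(f"False winners with one planned look: {fixed_rate:.1%}")
```

With settings like these, the peeking strategy should declare noticeably more false winners than the single planned look, which stays close to the 5% you signed up for. If you genuinely need to monitor results as they arrive, sequential testing methods are designed for that.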

As a data analyst, your job is part detective, part referee: making sure the experiment is fair, the data is clean, and the results actually mean something. Follow these tips, and you’ll turn potential flops into solid, trustworthy insights.
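One concrete referee check is a sample ratio mismatch (SRM) test: if you planned a 50/50 split but the groups came out noticeably uneven, something in the assignment or logging may be broken, and the results deserve suspicion. Here’s a minimal sketch using a chi-square goodness-of-fit test with one degree of freedom. The visitor counts are hypothetical.

```python
from math import erfc, sqrt

def srm_p_value(n_a: int, n_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for the observed A/B split (1 degree of freedom)."""
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = (n_a - expected_a) ** 2 / expected_a + (n_b - expected_b) ** 2 / expected_b
    # For 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    return erfc(sqrt(chi2 / 2))

print(f"5,000 vs 5,000: p = {srm_p_value(5_000, 5_000):.3f}")
print(f"5,000 vs 5,300: p = {srm_p_value(5_000, 5_300):.4f}")
```

A tiny p-value here is a reason to investigate the pipeline before trusting any uplift; it tells you something is off, not which bug caused it.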

Wrapping It Up: Let Data Decide

Vibes might be fun, but they won’t drive real results. A/B testing shows that even tiny experiments can spark big growth, and it gives data analysts a chance to prove their impact.

In business, the smartest decisions aren’t made on hunches; they’re made on evidence. And that evidence starts with something simple: version A versus version B. Let the data decide, and let your insights speak louder than guesses.

🚀 Ready to run your first A/B test? Start with one simple hypothesis and let the data surprise you!